Black-Box Agent Testing with MCP

August 7, 2025

tl;dr: I propose a method for testing agents by defining tasks and expected outputs via an MCP server.

Note: this article assumes some familiarity with the Model Context Protocol (MCP).

There's no universally agreed-upon definition for AI agents. My personal definition is something like: "an AI agent is software that uses AI models to take autonomous actions." This definition leaves a lot of room for flexibility about what agents actually look like. For example, the following attributes may differ among agents:

- What programming language(s) is the agent written in?

- What software environment(s) does the agent run in?

- What sort of hardware does the agent run on?

- What sort of tools or interfaces does the agent have access to?

- What sort of persistence layer(s) or forms of memory does the agent have?

- At what time scale(s) or speed(s) does the agent operate?

- Which AI model(s) does the agent use? Are they LLMs, or other types of models?

- etc.

In agent testing, we want to compare many different agents to each other in terms of behavior and capabilities. This means we should design testing strategies that are agnostic to as many of these details as possible. In other words, we should write tests that focus on what an agent does (or can do), rather than the precise details of how it operates. If we do this successfully, AI developers will be able to "plug in" agents to arbitrary test suites with little to no adaptation required!

With this in mind, let's design a testing strategy that we can use to do this sort of black-box testing.

Designing a Testing Strategy

Our design goals are:

- The test program should require as little information about the agent as possible.

- The agent should require as little information about the test program as possible.

To facilitate this, we'll need some standard interface through which the tests and agent can communicate. Text streams and HTTP are two good low-level options; however, both need additional conventions for how to interpret and exchange messages between systems. Luckily, the Model Context Protocol (MCP) was designed for this exact problem: with it, we can define interfaces in one system and make them discoverable by another system, without prior knowledge of those interfaces! Additionally, MCP is quickly becoming a standard way for AI agents to connect to external tools and data, so we can expect many agents to support it out of the box.

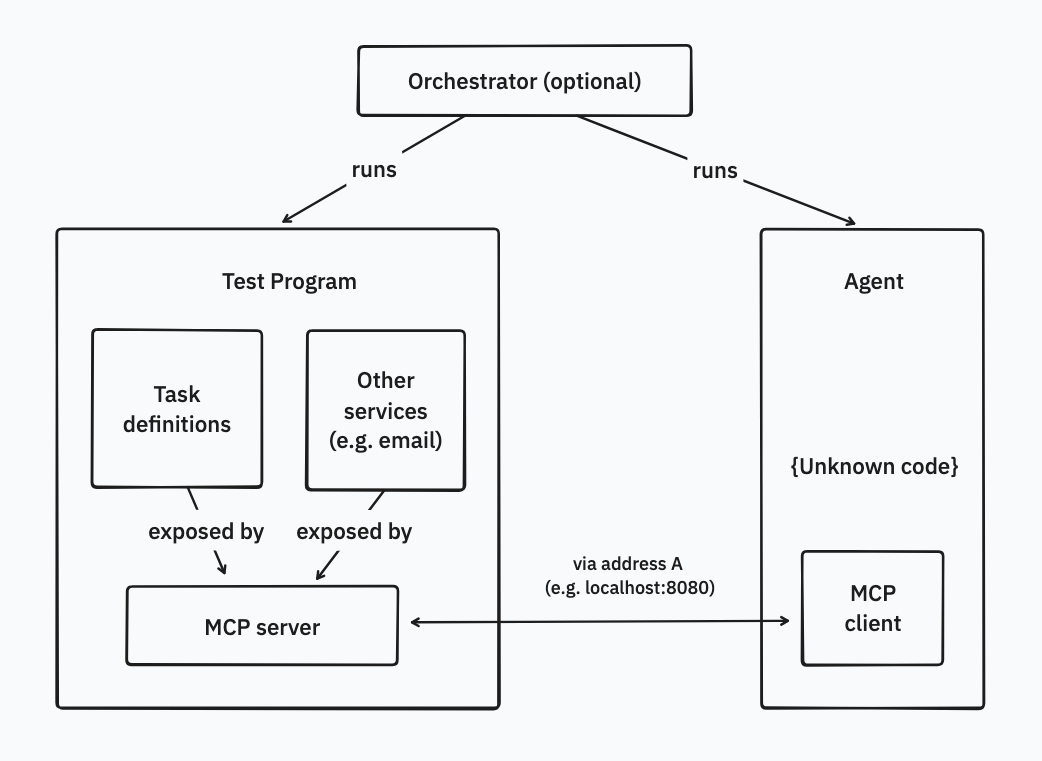
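To make "discoverable without prior knowledge" a bit more concrete, here's a rough sketch of the two JSON-RPC methods MCP uses for tools: `tools/list` (discovery) and `tools/call` (invocation). This is a simplified in-process stand-in, not a real MCP server: it skips the `initialize` handshake and any transport, and the `list_tasks` tool and `handle` function are names I made up for illustration.

```python
import json

# Hypothetical tool registry for a test server. The tool name, schema, and
# handler are illustrative assumptions, not part of any real server.
TOOLS = {
    "list_tasks": {
        "description": "Return the tasks the agent should complete.",
        "inputSchema": {"type": "object", "properties": {}},
        "handler": lambda args: ["Reply to unread emails with an out-of-office message."],
    },
}


def handle(request_json: str) -> str:
    """Answer a JSON-RPC 2.0 request for the two MCP tool methods."""
    request = json.loads(request_json)
    method, params = request["method"], request.get("params", {})

    if method == "tools/list":
        # Discovery: describe every tool so the client needs no prior knowledge.
        result = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        output = TOOLS[params["name"]]["handler"](params.get("arguments", {}))
        # Tool results carry a list of content items; text is the simplest kind.
        result = {"content": [{"type": "text", "text": json.dumps(output)}]}
    else:
        return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})

    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# The "agent" side: discover tools first, then call one by its advertised name.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
tool_name = listing["result"]["tools"][0]["name"]
reply = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": tool_name, "arguments": {}},
})))
print(tool_name, reply["result"]["content"][0]["text"])
```

The point is that the caller never hard-codes `list_tasks`; it learns the name from the listing. In practice you'd use an MCP SDK rather than hand-rolling the messages.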

Now, let's use MCP to connect a test harness and an agent. We'll have:

- A test MCP server, listening at some address A, that defines tasks, inputs and desired outputs for agents

- An agent, running separately, that checks for an MCP server at address A and accomplishes any given tasks as directed

- Optional: an orchestrator to start and stop both processes

Here's a diagram of the system:

Notably, the only shared information between systems is the address where the MCP server is running. The test MCP server doesn't know any details about the agent; it doesn't even know whether the agent is running locally or remotely. It simply expects that the agent will communicate via that address. Likewise, the agent doesn't know any details about the test server other than the MCP address.
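The optional orchestrator can be very small. Here's a minimal sketch, assuming both sides are launched as ordinary command-line processes; `run_test_against_agent` is a hypothetical helper, and the two `-c` commands are stand-ins so the snippet runs on its own.

```python
import subprocess
import sys


def run_test_against_agent(agent_cmd, test_cmd, timeout=60):
    """Start the agent, run the test process to completion, then stop the agent.

    Returns the test process's exit code (0 means the test passed).
    """
    agent = subprocess.Popen(agent_cmd)
    try:
        # The test process hosts the MCP server; the agent is expected to
        # keep checking the shared address until that server comes up.
        return subprocess.run(test_cmd, timeout=timeout).returncode
    finally:
        agent.terminate()  # ask the agent to exit
        try:
            agent.wait(timeout=5)
        except subprocess.TimeoutExpired:
            agent.kill()  # force it if it ignores the request
            agent.wait()


# Stand-in commands: a long-running "agent" and a "test" that exits cleanly.
exit_code = run_test_against_agent(
    [sys.executable, "-c", "import time; time.sleep(30)"],
    [sys.executable, "-c", "raise SystemExit(0)"],
)
print(exit_code)
```

With a real agent and test script, the only thing the two command lines would need to agree on is the MCP address.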

In addition to task definitions, the test harness can provide auxiliary MCP services that will be discoverable by the agent. These are particularly useful for input and output. For example, a task might be: "Answer the unread emails in my inbox." Accordingly, the test MCP server should provide tools for the agent to read and write (mock) emails.
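As a rough illustration of what could sit behind those mock email tools, here's an in-memory sketch. The class, method, and field names are my own assumptions for this example; the idea is just that the agent-facing operations (`read_unread`, `send`) would be registered as MCP tools, while the test keeps direct access to the recorded state.

```python
from dataclasses import dataclass, field


@dataclass
class Email:
    sender: str
    to: str
    subject: str
    body: str
    read: bool = False


@dataclass
class MockEmailService:
    """In-memory mailbox; nothing is ever sent over a network."""
    owner: str = "me@example.test"  # hypothetical mailbox owner
    inbox: list = field(default_factory=list)
    sent: list = field(default_factory=list)

    # Used by the test to set up and inspect state.
    def add(self, sender, message):
        self.inbox.append(Email(sender, self.owner, message["subject"], message["body"]))

    def get_sent_emails(self):
        return list(self.sent)

    # The two operations that would be exposed to the agent as tools.
    def read_unread(self):
        unread = [e for e in self.inbox if not e.read]
        for e in unread:
            e.read = True  # reading marks the message as seen
        return [{"from": e.sender, "subject": e.subject, "body": e.body} for e in unread]

    def send(self, to, subject, body):
        self.sent.append(Email(self.owner, to, subject, body))
        return "queued"


# Simulate what an agent might do through the tools.
service = MockEmailService()
service.add("alice@example.test", {"subject": "Hello", "body": "This is a test."})
for msg in service.read_unread():
    service.send(msg["from"], "Re: " + msg["subject"], "I'm out of office.")
print(len(service.get_sent_emails()))
```

Because outgoing mail is only recorded, the test can assert on exactly what the agent tried to send without any side effects.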

Code Example

Expanding on the prior example: let's say we want to test an agent's capability to leave an out-of-office reply. We'll define a task list that includes this task, and a mock email service that will be used to check the agent's output.

    # email_test.py
    MCP_ADDRESS = "http://localhost:8080"

    @test("Agent can leave an out-of-office reply")
    async def test_ooo():
        FAKE_EMAIL_ADDRESS = "[email protected]"

        # Define tasks
        task_list = TaskList(
            ["Please respond to any unread emails with an out-of-office reply."]
        )

        # Define mock email service
        email_service = MockEmailService()
        email_service.add(FAKE_EMAIL_ADDRESS, {
            "subject": "Hello",
            "body": "This is a test."
        })

        # Start MCP server and wait for tasks to complete
        mcp = mcp_server([task_list.mcp, email_service.mcp])
        mcp.start(address=MCP_ADDRESS)
        await task_list.wait_for_all_completed(timeout=10)

        # Check output
        sent_emails = email_service.get_sent_emails()
        assert len(sent_emails) == 1
        assert sent_emails[0].to == FAKE_EMAIL_ADDRESS
        assert is_out_of_office(sent_emails[0].body)  # we can use another LLM for this, or a classifier

Running this test against an agent might look like this:

    $ python agent.py --mcp-address http://localhost:8080  # Agent starts and waits for MCP server to be available
    $ python email_test.py  # Test script starts and waits for agent to complete tasks within a time limit

This is a simple example, but it should demonstrate the basic idea of communicating with an agent via MCP. I've added another (working) example here if you'd like to see what the code looks like end-to-end.
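The test above leans on `task_list.wait_for_all_completed(timeout=10)`. Here's one way that piece might work, sketched with plain `asyncio`. This `TaskList` is a hypothetical stand-in, not the implementation from the linked example: it assumes the agent reports progress by calling a `complete` tool with a task id.

```python
import asyncio


class TaskList:
    """Tracks which tasks the agent has reported as done."""

    def __init__(self, descriptions):
        self.tasks = dict(enumerate(descriptions))  # task id -> description
        self._pending = set(self.tasks)
        self._all_done = asyncio.Event()
        if not self._pending:
            self._all_done.set()

    def complete(self, task_id):
        """Would be exposed to the agent as a tool (an assumption of this sketch)."""
        self._pending.discard(task_id)
        if not self._pending:
            self._all_done.set()

    async def wait_for_all_completed(self, timeout):
        """Raise asyncio.TimeoutError if the agent doesn't finish in time."""
        await asyncio.wait_for(self._all_done.wait(), timeout)


async def demo():
    tasks = TaskList(["Reply to unread emails."])
    # Stand-in for the agent: reports completion shortly after starting.
    asyncio.get_running_loop().call_later(0.05, tasks.complete, 0)
    await tasks.wait_for_all_completed(timeout=1)
    return "done"


print(asyncio.run(demo()))
```

The timeout matters: an agent that never reports completion should fail the test, not hang it.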

Conclusion

I'm excited about the potential of this approach; I think it opens the door to a lot of interesting agent tests that would be difficult to run otherwise. In fact, this post is primarily a prerequisite to more agent-testing posts I have planned. So, stay tuned for those! In the meantime, if you have any thoughts or ideas for improving this approach, please let me know!

Note: The idea of intercepting emails from agents was inspired by Andon Labs' Vending-Bench.